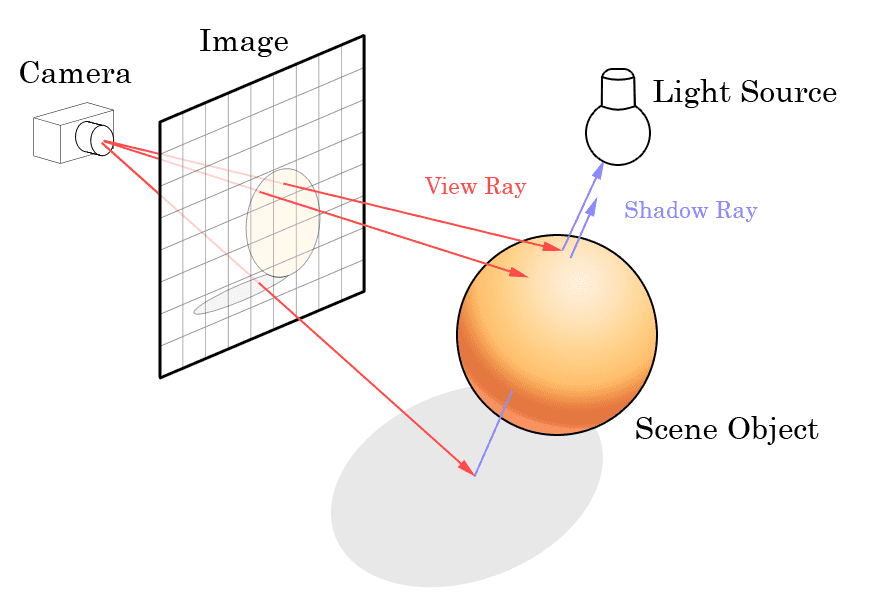

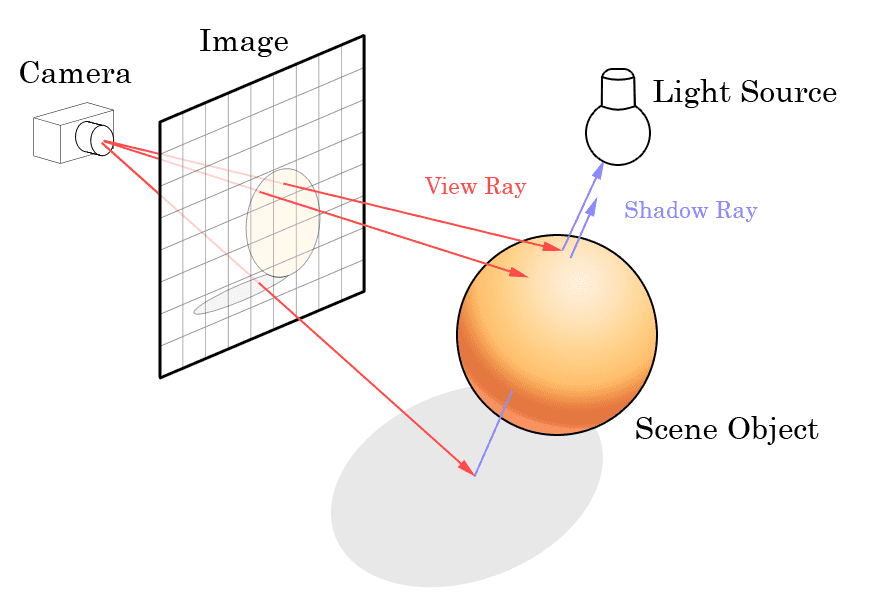

Ray tracing is a rendering technique that simulates lighting by tracing the paths of rays from the viewer's eye into the scene, finding the surfaces those rays hit and the light sources that illuminate them.

In the real world, when light strikes an object, part of it is absorbed and part is reflected. We perceive the object when the light reflected from it and its surroundings reaches our eyes.

Ray tracing runs this process in reverse. It treats the screen as the human eye (a camera "lens"), casts invisible rays from it toward the objects in the scene, checks whether the points those rays hit are lit by the scene's light sources, and displays each pixel with the brightness it computes.
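The loop described above can be sketched in a few dozen lines. The following is a minimal, illustrative Python sketch, not a production renderer. It assumes a scene made only of spheres, a single point light, a camera at the origin looking down the negative z axis, and plain diffuse (Lambertian) shading; the function names (`hit_sphere`, `nearest_hit`, `trace`, `render`) are hypothetical. For each pixel it casts a ray from the eye, finds the nearest sphere it hits, then casts a second "shadow ray" toward the light to check whether that point is actually lit.

```python
import math

# Minimal 3-vector helpers on plain tuples.
def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def nearest_hit(origin, direction, spheres):
    """Closest sphere along the ray as (t, (center, radius)), or (None, None)."""
    best_t, best = None, None
    for center, radius in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (best_t is None or t < best_t):
            best_t, best = t, (center, radius)
    return best_t, best

def trace(origin, direction, spheres, light_pos):
    """Brightness in [0, 1] seen along one ray."""
    t, sphere = nearest_hit(origin, direction, spheres)
    if sphere is None:
        return 0.0  # the ray escaped: black background
    center, _ = sphere
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    to_light = sub(light_pos, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = scale(to_light, 1.0 / light_dist)
    # Shadow ray: nudge the start off the surface, then look for blockers.
    shadow_t, blocker = nearest_hit(add(point, scale(normal, 1e-4)), to_light, spheres)
    if blocker is not None and shadow_t < light_dist:
        return 0.0
    return max(0.0, dot(normal, to_light))  # Lambert's cosine law

def render(width, height, spheres, light_pos):
    """Cast one primary ray through the centre of every pixel."""
    eye = (0.0, 0.0, 0.0)
    aspect = width / height
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Pixel centre mapped onto an image plane at z = -1.
            x = (2.0 * (i + 0.5) / width - 1.0) * aspect
            y = 1.0 - 2.0 * (j + 0.5) / height
            row.append(trace(eye, normalize((x, y, -1.0)), spheres, light_pos))
        image.append(row)
    return image

if __name__ == "__main__":
    scene = [((0.0, 0.0, -3.0), 1.0)]
    light = (5.0, 5.0, 0.0)
    ramp = " .:-=+*#%@"
    for row in render(40, 20, scene, light):
        print("".join(ramp[min(int(v * len(ramp)), len(ramp) - 1)] for v in row))
```

Run as a script, it prints the lit sphere as ASCII art; any point whose shadow ray is blocked before reaching the light comes out black. Whitted-style ray tracers extend `trace` by recursively spawning reflection and refraction rays at each hit.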

The concept was first proposed in prototype form in 1968 by IBM employee Arthur Appel; in 1979 Turner Whitted refined the idea into a concrete implementation.

Ray tracing makes it possible to simulate lighting without computing the light throughout the entire scene. It only needs to compute light along the paths that reach the eye, that is, for the content the viewer actually sees, which greatly reduces the resources needed to render an image at the same lighting quality.
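To get a feel for why tracing from the eye saves so much work, here is a rough, back-of-the-envelope sketch in Python. It assumes an isotropic point light, a pupil modelled as a 4 mm-radius disc 5 m away and facing the light, and no bounces at all; these numbers and the function name `fraction_reaching_eye` are illustrative assumptions, not measurements. It computes what fraction of the photons emitted by the light would land on the pupil if light were traced forward from the source, and compares that with the eye-first approach, which needs only one primary ray per pixel.

```python
import math

def fraction_reaching_eye(pupil_radius_m: float, distance_m: float) -> float:
    """Fraction of photons from an isotropic point light that hit a disc-shaped
    pupil facing the light (ratio of solid angles, no bounces)."""
    half_angle = math.atan2(pupil_radius_m, distance_m)
    # Cone solid angle is 2*pi*(1 - cos a); the full sphere is 4*pi.
    # (1 - cos a) / 2 == sin(a / 2) ** 2, which avoids cancellation for tiny angles.
    return math.sin(half_angle / 2) ** 2

if __name__ == "__main__":
    frac = fraction_reaching_eye(0.004, 5.0)  # hypothetical 4 mm pupil, light 5 m away
    print(f"fraction of photons reaching the eye: {frac:.2e}")
    print(f"photons emitted per photon seen: {1 / frac:,.0f}")
    width, height = 1920, 1080
    print(f"eye-first primary rays for a {width}x{height} frame: {width * height:,}")
```

With these assumed numbers only about one photon in six million reaches the eye, so forward tracing would spend nearly all of its work on light nobody sees, while an eye-first tracer spends its rays only on visible content.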

Before graphics cards for personal computers could perform real-time ray tracing, games still needed convincing lighting to heighten their impact, but PCs did not have the performance for that much lighting computation. Game developers therefore devised a variety of techniques to approximate lighting as convincingly as possible.

As early as the pioneering FPS "Doom", muzzle flashes from gunfire were simulated, but this was still very rudimentary: apart from the flash itself, neither the enemies nor the surrounding scene received any further lighting simulation. Later, the classic "Half-Life 2" introduced more scene lighting calculation, so players could see light and shadow change with each attack, because the shadow changes caused by that lighting were computed at the moment they happened. Even so, the scene as a whole still lacked more extensive lighting calculation.

Developers later found an approach that is both cheaper in performance and better looking: pre-rendering. During production, the lighting for each map that will appear in the game is computed in advance to achieve the desired look. At run time the game simply loads the precomputed lighting for that map and computes only a small amount of lighting in real time, which achieves quite good results.
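One common form of this pre-rendering is lightmap baking. The Python sketch below is a simplified illustration under stated assumptions, not how any particular game or engine does it: it bakes direct diffuse lighting from point lights onto a flat floor grid (deliberately ignoring occlusion and bounced light, which real bakers also compute), then shows that the per-frame cost reduces to a lookup plus a small dynamic term. The names `PointLight`, `bake_lightmap`, and `shade_at_runtime` are hypothetical.

```python
import math
from typing import List, NamedTuple, Tuple

class PointLight(NamedTuple):
    position: Tuple[float, float, float]
    intensity: float

def bake_lightmap(width: int, height: int, texel_size: float,
                  lights: List[PointLight]) -> List[List[float]]:
    """Offline step: direct diffuse light on a floor plane y = 0 (normal +y).
    Occlusion and bounced light are deliberately ignored in this sketch."""
    lightmap = []
    for j in range(height):
        row = []
        for i in range(width):
            # World-space centre of this texel on the floor.
            px, pz = (i + 0.5) * texel_size, (j + 0.5) * texel_size
            total = 0.0
            for light in lights:
                lx, ly, lz = light.position
                dx, dy, dz = lx - px, ly, lz - pz  # the floor point has y = 0
                dist2 = dx * dx + dy * dy + dz * dz
                cos_theta = max(0.0, dy / math.sqrt(dist2))  # angle to the +y normal
                total += light.intensity * cos_theta / dist2  # inverse-square falloff
            row.append(total)
        lightmap.append(row)
    return lightmap

def shade_at_runtime(albedo: float, baked: float, dynamic: float = 0.0) -> float:
    """Per-frame step: look up the baked value and add a small real-time term."""
    return albedo * (baked + dynamic)

if __name__ == "__main__":
    lights = [PointLight((2.0, 3.0, 2.0), 10.0)]
    baked = bake_lightmap(4, 4, 1.0, lights)  # done once, during production
    for row in baked:
        print(" ".join(f"{v:.2f}" for v in row))
    # At run time: one texel's stored value plus a hypothetical muzzle-flash term.
    print(shade_at_runtime(albedo=0.8, baked=baked[1][1], dynamic=0.3))
```

The expensive loop over lights runs once during production; at run time only `shade_at_runtime` is evaluated, which is why this kind of approach was affordable on hardware that could not compute full lighting every frame.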

Some may ask: if computers render the images in both cases, why do film visual effects, especially in science-fiction films, have much more realistic lighting? Because the film industry adopted ray tracing first. Years before ray tracing reached personal computers, the film industry was already using it to improve the lighting in its images.

Games must render 30 or even 60 frames every second. Films face no such requirement: a single frame can take tens of minutes or even hours to render on a cluster of powerful computers, achieving lighting comparable to the real world.
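The gap in time budgets is easy to quantify. The small Python sketch below (its function names are illustrative) converts a frame rate into a per-frame budget and compares it with an offline frame that takes minutes to render. It is only a per-frame comparison and ignores the fact that a render farm usually works on many frames in parallel.

```python
SECONDS_PER_MINUTE = 60

def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at a given frame rate, in milliseconds."""
    return 1000.0 / fps

def budget_ratio(offline_minutes_per_frame: float, fps: float) -> float:
    """How many times more time one offline frame gets than one real-time frame."""
    offline_ms = offline_minutes_per_frame * SECONDS_PER_MINUTE * 1000.0
    return offline_ms / frame_budget_ms(fps)

if __name__ == "__main__":
    for fps in (30, 60):
        print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
    for minutes in (10, 60):
        print(f"{minutes} min per frame is {budget_ratio(minutes, 60):,.0f}x the 60 fps budget")
```

At 60 fps a game has about 16.7 ms per frame, so a film frame that renders for an hour gets roughly 216,000 times as much time.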
